Analysis of cognitive warfare and information manipulation in the Israel-Hamas war 2023

Introduction

Cognitive warfare, information warfare, and disinformation are increasingly being used to undermine democratic societies. These tactics can sow discord, weaken public trust in institutions, and influence public opinion. This article uses AI technology to examine the cognitive warfare and information manipulation surrounding the Israel-Hamas war in 2023; the empirical evidence indicates that these tactics were employed even before the conflict began.

Taiwan AI Labs is the first open AI research institute in Asia focused on Trustworthy AI computing, responsible AI methodologies, and delivering rights-respecting solutions. With a keen interest in the intersection of social media, artificial intelligence, and cognitive warfare, our team is acutely aware of the profound impact of these domains on democratic societies. In this context, our objective is to provide an in-depth analysis of how cognitive warfare, information warfare, and the propagation of misinformation affect the fabric of democratic societies. For example, our team found disinformation about the Israel-Hamas conflict on TikTok in early June.

This article uses the Israel-Hamas war in 2023 to shed light on how artificial intelligence can unveil the intricate web of influence campaigns that precede and accompany armed conflicts. The study investigates how various state and non-state actors strategically conduct cognitive warfare and information operations in the digital sphere before and during conflicts. The analysis delves into manipulation by suspicious accounts across diverse social media platforms and the involvement of foreign entities in shaping the information landscape.

This research’s findings are insightful and carry significant implications for national security, counter-terrorism efforts, and cognitive warfare strategies. Understanding the dynamics of cognitive and information warfare is paramount in countering external influences and safeguarding the integrity of democratic processes. This study serves as a foundation for future endeavors to develop observation indicators for counter-terrorism and cognitive warfare, ultimately contributing to the preservation of democratic values and the well-being of societies.

In a world where information is a potent weapon, this research endeavors to unveil the intricate strategies at play in the digital realm, shedding light on the dynamics that can potentially shape the future of democratic societies. For example, authoritarian regimes such as China are using generative AI to manipulate public affairs globally, especially in democratic societies [1,2]. With this foundation, we embark on a journey to comprehend, analyze, and counter the evolving landscape of cognitive warfare and information operations.

Methodology

Taiwan AI Labs uses its analytical software “Infodemic” to investigate information operations across multiple social media platforms. The algorithms are described in detail below.

Analytics data coverage and building similarity nodes between user accounts

1. Analytics data

In the scope of this research, we conducted a comprehensive analysis of 71,774 dubious user accounts sourced from a diverse array of social media platforms, including YouTube, X (Twitter), and the most significant online forum in Taiwan, PTT. We organized these suspicious accounts into 9,737 distinct coordinated groups using an advanced user clustering algorithm.

The central aim of this investigation was to examine the strategies employed by these coordinated groups in the realm of information manipulation, with a particular focus on their activities related to the Israel-Hamas war in 2023. Our analysis covered 6,942 news articles, 63,264 social media posts, and 942,205 comments published between August 10 and October 10, 2023.

2. Analysis Pipeline

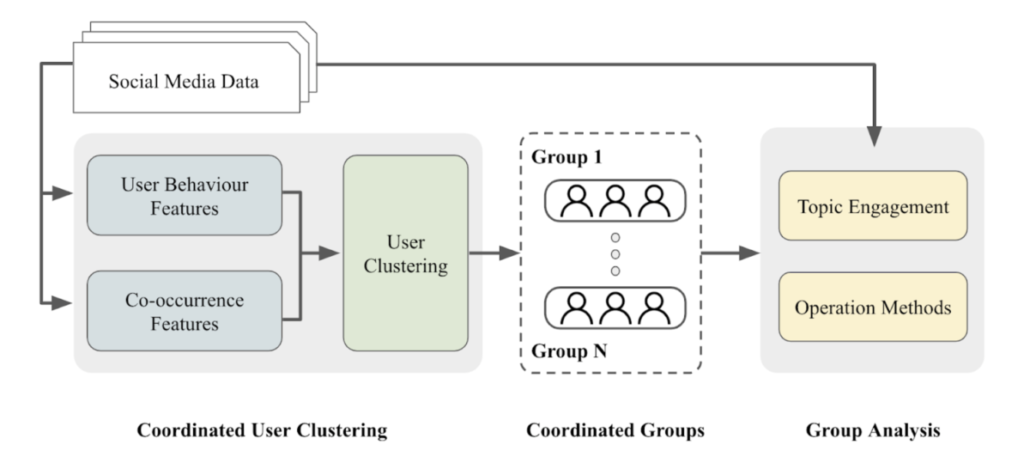

Figure 1: An overview of the coordinated behavior analysis pipeline

Figure 1 illustrates the analysis pipeline of this report, consisting of three components:

- User Feature Construction: We analyze and quantify the behavioral characteristics of a given user and transform the user features into user vectors.

- User Clustering: Leveraging the user vectors, we build a network of related users and apply a community detection algorithm to identify highly correlated user groups, which we categorize as collaborative entities for further analysis.

- Group Analysis: We delve into the operational strategies of these collaborative groups, examining aspects such as the topics they engage with, their operation methods, and their tendencies to support or oppose specific entities.

In the subsequent sections, we will provide detailed explanations of each of these components.

User Feature Construction

To capture user information on social forums effectively, we propose two feature sets:

- User Behavior Features

Data preparation for user behavior features is a critical step in extracting meaningful insights from the given dataset, which encompasses a wide range of information related to social posts (or videos) and user interactions.

We collected a broad spectrum of raw social data, which was then transformed into a series of columns representing user behavior features, such as the ‘destination of user interactions’ (post_id or video_id), the ‘time of user actions’, and the ‘domain of shared links by users’, and so forth. These user behavior features will be further transformed and organized for use in user similarity evaluation and clustering.

- Co-occurrence Features

The purpose of co-occurrence features is to identify users who frequently engage with the same topics or respond to the same articles. We employ Non-Negative Matrix Factorization (NMF) to quantify co-occurrence features among users.

NMF [4] is a mathematical technique for data analysis and dimensionality reduction that decomposes a non-negative matrix into two or more factor matrices whose elements are all non-negative.

Specifically, to construct the features for M users and N posts, we build an M × N relationship matrix that records each user’s engagement with each post. We then apply NMF to decompose this matrix and use the resulting user vectors as the co-occurrence features for each user.
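The decomposition described above can be sketched as follows. This is a minimal illustration, not the Infodemic implementation: it assumes the classic multiplicative-update rules of Lee and Seung [4], and the engagement matrix `V`, the `rank`, and the function name `nmf` are hypothetical choices made for the example.

```python
import numpy as np

def nmf(V, rank, n_iter=200, eps=1e-9, seed=0):
    """Factor a non-negative M x N matrix V into W (M x rank) and H (rank x N)
    using multiplicative updates for the squared-error objective."""
    rng = np.random.default_rng(seed)
    M, N = V.shape
    # Random non-negative initialization; eps keeps entries strictly positive.
    W = rng.random((M, rank)) + eps
    H = rng.random((rank, N)) + eps
    for _ in range(n_iter):
        # Each update multiplies by a non-negative ratio, so W and H stay non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Hypothetical engagement matrix: rows are users, columns are posts,
# each entry is the number of comments a user left on a post.
V = np.array([
    [3, 2, 0, 0],
    [2, 3, 0, 0],
    [0, 0, 4, 1],
    [0, 0, 1, 5],
], dtype=float)

W, H = nmf(V, rank=2)
user_vectors = W  # one row per user: the co-occurrence feature vector
error = np.linalg.norm(V - W @ H)
```

Each row of `user_vectors` is a low-dimensional summary of which posts a user engages with, so users who comment on the same posts tend to receive similar rows.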

User Clustering

- User Similarity Evaluation

After completing the construction of user features, our next step involves evaluating the coordinated relationships between users. For behavioral features, we compare various behaviors between user pairs and normalize the comparison result to a range between 0 and 1. For instance, concerning user activity times, we record the activity times for each user within a week as a 7×24-dimensional matrix. We then calculate the cosine similarity between pairs of users based on their activity times. In the case of co-occurrence features, we use cosine similarity to assess the similarity between co-occurring vectors of users.

By computing the cosine of the angle between these vectors, we can quantify how similar two users’ responses or actions are. This technique is particularly useful in the study of social media, where it allows users to be grouped according to common behavior patterns [3]. User pairs with high cosine similarity exhibit a highly coordinated pattern of behavior.
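As a rough sketch of the activity-time comparison, the snippet below builds a 7×24 weekly activity matrix per user and compares two users with cosine similarity. The helper names and the sample timestamps are hypothetical. Because the counts are non-negative, the similarity already falls between 0 and 1.

```python
from datetime import datetime
import numpy as np

def activity_matrix(timestamps):
    """Count a user's actions per (weekday, hour) slot as a 7 x 24 matrix."""
    m = np.zeros((7, 24))
    for ts in timestamps:
        m[ts.weekday(), ts.hour] += 1
    return m

def cosine_similarity(a, b):
    """Cosine of the angle between two arrays, flattened to vectors."""
    a, b = np.ravel(a), np.ravel(b)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

# Hypothetical users: A and B are active in the same weekly slots, C is not.
user_a = activity_matrix([
    datetime(2023, 10, 9, 9, 5),    # Monday, 09:00 slot
    datetime(2023, 10, 9, 9, 40),   # Monday, 09:00 slot
    datetime(2023, 10, 10, 21, 15), # Tuesday, 21:00 slot
])
user_b = activity_matrix([
    datetime(2023, 10, 9, 9, 30),   # Monday, 09:00 slot
    datetime(2023, 10, 10, 21, 50), # Tuesday, 21:00 slot
])
user_c = activity_matrix([datetime(2023, 10, 7, 3, 0)])  # Saturday, 03:00 slot

sim_ab = cosine_similarity(user_a, user_b)
sim_ac = cosine_similarity(user_a, user_c)
```

Here `sim_ab` is close to 1 because the two users share the same active slots, while `sim_ac` is 0 because their active slots never overlap.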

- User Clustering

After constructing pairwise similarities among users based on their respective features, we establish an edge between every pair of users whose similarity exceeds a predefined threshold, thereby creating a user network. We then apply Infomap to cluster this network. Infomap is an algorithm that identifies community structure within a network based on information flow. The communities detected in this network are treated as coordinated groups in the following sections.
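The thresholding step can be sketched as below. Note the substitution: to keep the example dependency-free, it groups users by connected components of the thresholded graph rather than running Infomap, which would split large components further based on information flow. The similarity values, the threshold of 0.6, and the choice to drop single-user groups are all assumptions made for illustration.

```python
import numpy as np

def coordinated_groups(sim, threshold):
    """Link users whose similarity exceeds `threshold`, then return the
    connected components of that graph (a simple stand-in for Infomap)."""
    n = sim.shape[0]
    neighbors = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if sim[i, j] > threshold:
                neighbors[i].add(j)
                neighbors[j].add(i)

    groups, seen = [], set()
    for start in range(n):
        if start in seen:
            continue
        # Depth-first search collects every user reachable from `start`.
        stack, component = [start], []
        seen.add(start)
        while stack:
            u = stack.pop()
            component.append(u)
            for v in neighbors[u]:
                if v not in seen:
                    seen.add(v)
                    stack.append(v)
        groups.append(sorted(component))
    # Assumption: a user with no edges is not part of any coordinated group.
    return [g for g in groups if len(g) > 1]

# Hypothetical pairwise similarity matrix for five users.
sim = np.array([
    [1.0, 0.9, 0.8, 0.1, 0.0],
    [0.9, 1.0, 0.7, 0.2, 0.1],
    [0.8, 0.7, 1.0, 0.0, 0.1],
    [0.1, 0.2, 0.0, 1.0, 0.3],
    [0.0, 0.1, 0.1, 0.3, 1.0],
])
groups = coordinated_groups(sim, threshold=0.6)
```

With this input, users 0, 1, and 2 form one group and users 3 and 4 are left ungrouped. Lowering the threshold admits weaker links and therefore more, or larger, groups.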

Group Analysis

- Opinion Clustering

To efficiently understand the narratives put forward by each user group, we applied a text clustering technique to the comments posted by coordinated groups. Specifically, we used a pre-trained text encoder to convert each comment into a vector and applied a hierarchical clustering algorithm to group related comments into the same cluster, which is used in the following analysis.

- Stance Detection and Narrative Summary

Large pretrained language models have demonstrated their utility in extracting entities mentioned within textual content while simultaneously providing relevant explanations [5]. This capability contributes to the comprehension of pivotal elements within the discourse, particularly in understanding how comments and evaluations affect the identified entities.

In this report, we use Taiwan LLaMa for text analysis. Taiwan LLaMa is a large language model pre-trained on a native Taiwanese-language corpus. In our evaluation, it showed remarkable proficiency in understanding Traditional Chinese, and it excels at identifying and comprehending Taiwan-related topics and entities. More specifically, we use Taiwan LLaMa to extract key topics, entities, and organization names from each comment. We then ask it to assess the comment author’s stance on these entities, categorizing it as positive, neutral, or negative. This process is applied to all opinion clusters.

Finally, we calculate the percentage of mentions of each main topic or entity within the opinion cluster and the percentage of positive and negative sentiment associated with each topic or entity. We also use the LLM to generate a summary of each opinion cluster, helping data analysts grasp the overall picture of the event efficiently.
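The percentage calculation can be sketched as follows, assuming the language model has already returned one (entity, stance) pair per mention. The function name, the output field names, and the choice of denominator (all entity mentions in the cluster) are assumptions; the source does not specify them.

```python
from collections import Counter, defaultdict

def summarize_cluster(annotations):
    """Aggregate (entity, stance) pairs for one opinion cluster.

    `stance` is expected to be "positive", "neutral", or "negative".
    Returns, per entity, its share of all mentions and its stance breakdown.
    """
    mentions = Counter(entity for entity, _ in annotations)
    stances = defaultdict(Counter)
    for entity, stance in annotations:
        stances[entity][stance] += 1

    total = sum(mentions.values())
    summary = {}
    for entity, count in mentions.items():
        summary[entity] = {
            # Assumed denominator: every entity mention in the cluster.
            "mention_pct": 100 * count / total,
            "positive_pct": 100 * stances[entity]["positive"] / count,
            "negative_pct": 100 * stances[entity]["negative"] / count,
        }
    return summary

# Hypothetical model output for a four-mention cluster.
annotations = [
    ("Israel", "negative"),
    ("Israel", "negative"),
    ("Israel", "positive"),
    ("Hamas", "negative"),
]
summary = summarize_cluster(annotations)
```

In this toy cluster, Israel accounts for 75% of mentions, two thirds of them negative, and Hamas accounts for the remaining 25%, all negative.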

Results

In this incident, we found that the information manipulation patterns of troll groups differed before and after the Hamas attack on October 7th:

The manipulation pattern before the Hamas attack

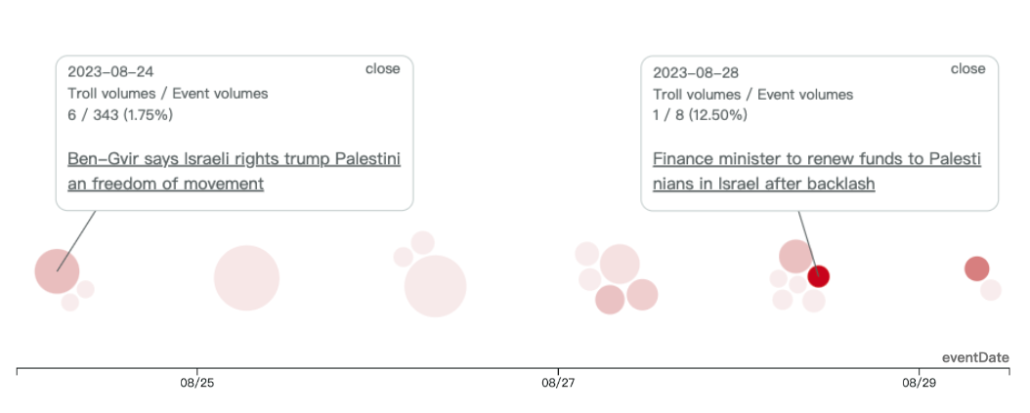

The first event traces back to August 24th, when Israel’s far-right National Security Minister, Itamar Ben-Gvir, stated that Israeli rights trump Palestinian freedom of movement. Ben-Gvir said that his right to move around unimpeded is superior to the freedom of movement of Palestinians in the occupied West Bank, sparking outrage.

Figure 2: A beeswarm plot showing the timeline of the stories after ‘Ben-Gvir says Israeli rights trump Palestinian freedom of movement.’

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

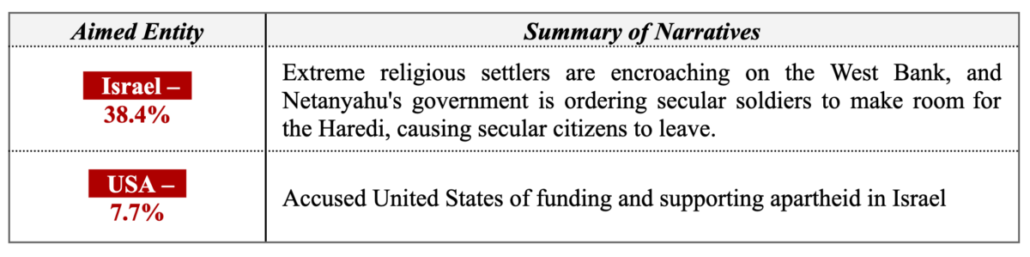

This study clustered the manipulated comments from the coordinated troll groups into narratives about the story events above. Israel was the most targeted entity and was cast in a negative light, with accusations pointing to Israel as the culprit behind the tragedy in the West Bank. In addition, because the USA funds and supports Israel, coordinated troll groups also accused the USA of encouraging Israel’s apartheid policy.

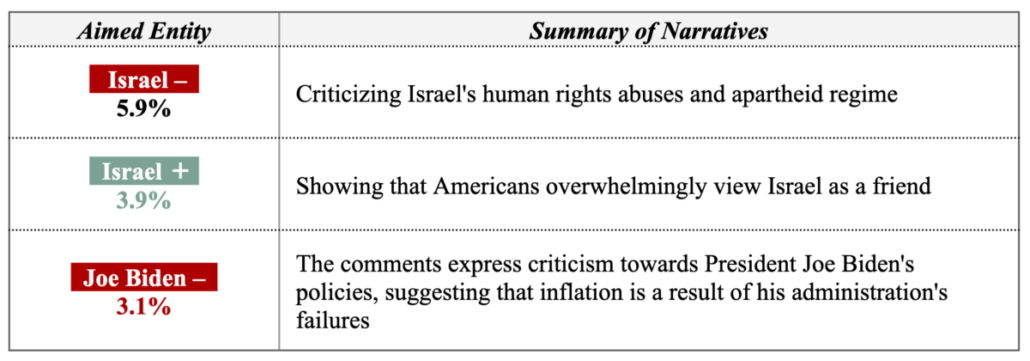

Table 1: Aimed entities and summary of narratives manipulated by coordinated troll groups in August.

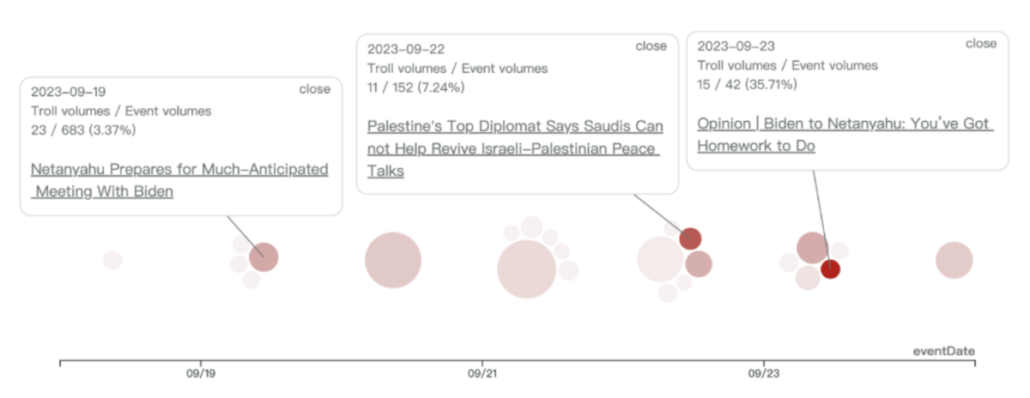

On September 19th, Israeli Prime Minister Benjamin Netanyahu arrived in the U.S. for a meeting with President Joe Biden amid unprecedented demonstrations in Israel against a planned overhaul of Israel’s judicial system. Also on the agenda was a possible U.S.-brokered deal for normalization between Israel and Saudi Arabia. This study discovered a series of manipulations by troll groups around this event as well.

Figure 3: A beeswarm plot showing the timeline of the stories after ‘Netanyahu Prepares for Much-Anticipated Meeting With Biden.’

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

In these events, the study identified two contrasting manipulation methods: one involving criticism of Israel and Joe Biden, and the other showing support for Israel, reflecting the overwhelming American support for the country. In the majority of manipulations, coordinated troll groups primarily focused on accusing Israel of human rights abuses and of operating an apartheid regime in the Israeli-Palestinian conflict. These groups also criticized Joe Biden for his handling of the incident, accusing him of administrative incompetence and of causing a domestic economic downturn.

Table 2: Aimed entities and summary of narratives manipulated by coordinated troll groups in September

This evidence shows that, before the Hamas attack on October 7th, coordinated troll groups spread narratives in August and September blaming Israel for its apartheid policies and encroachment on the West Bank. Viewed through the lens of information manipulation, these narratives may have served to justify the attack that Hamas launched on Israel on October 7th. Information manipulation by coordinated troll groups could therefore be a leading indicator of future conflicts.

The manipulation pattern after the Hamas attack

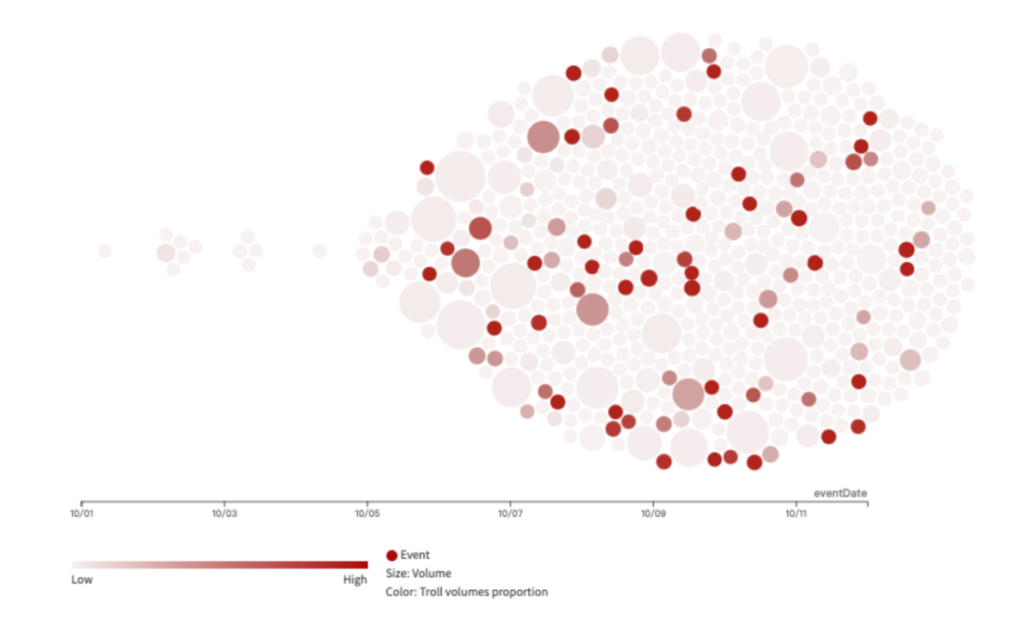

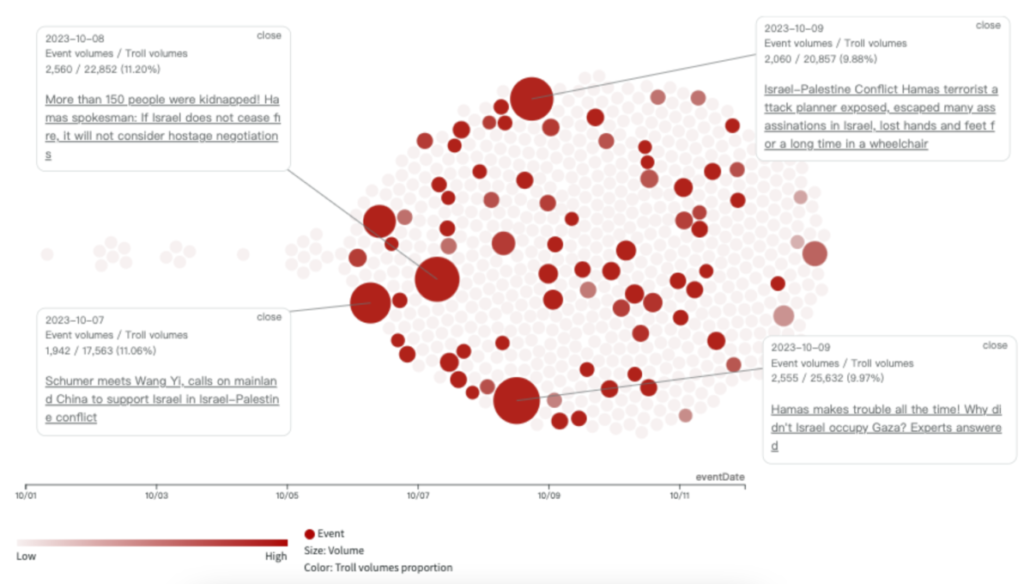

AI Labs consolidates similar news articles into events using AI technology and visually represents these events on a timeline using a beeswarm plot. Within this representation, each circle signifies the social volume of an event. The color indicates the percentage of troll activity, with darker shades signifying higher levels of coordinated actions.

Following the incident on October 7, the event timeline is depicted in the figure below. Notably, after Hamas’ attack on Israel, there was a significant spike in activity, with varying degrees of coordinated actions across numerous events.

Figure 4: A beeswarm plot showing the timeline of the story events of Hamas attack

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

AI Labs categorizes these events based on different social media platforms, with the analysis as follows:

Case study 1: YouTube

From the data AI Labs collected on YouTube, there are 497 videos with 175,072 comments. Among these, 681 comments are identified as coming from troll accounts, accounting for approximately 0.389% of the total comments. There are 64 distinct troll groups involved in these operations.

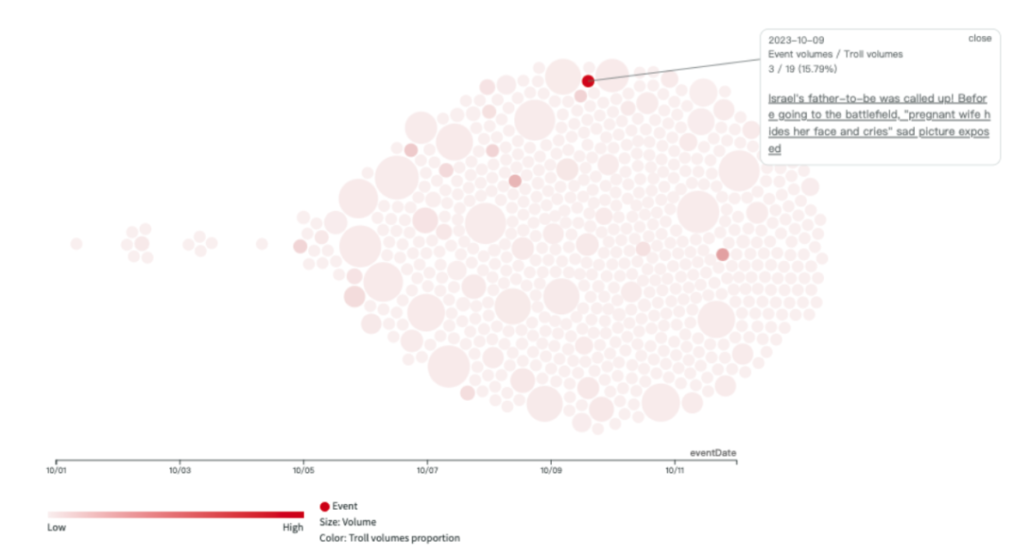

The timeline for activity on the YouTube platform is as follows. The most manipulated story was “Israel’s father-to-be was called up! Before going to the battlefield, ‘the pregnant wife hides her face and cries,’ the sad picture exposed.” For this event, the level of troll activity on the YouTube platform was 15.79%.

Figure 5: A beeswarm plot showing the timeline of the most manipulated story on YouTube.

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

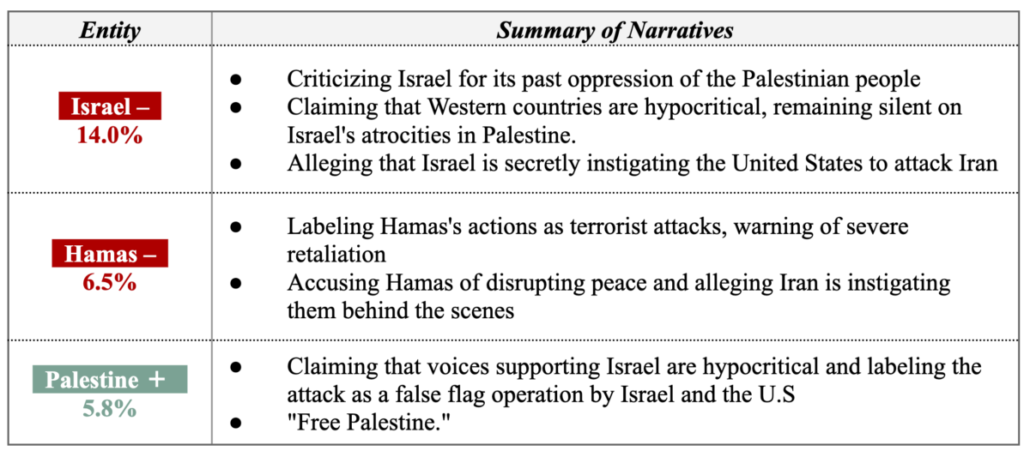

Utilizing advanced AI analytics, AI Labs conducted an in-depth examination of the actions taken by certain coordinated online groups. The analysis revealed that the primary focuses of these operations encompass subjects like Israel, Hamas, and Palestine. Specifically:

14% of the troll narratives attack Israel, including criticism of its historical transgressions against the Palestinian populace. Within this discourse, there is also a prevalent assertion that Western nations display marked hypocrisy by turning a blind eye to Israel’s actions in Palestine. Further, emerging speculation insinuates that Israel is covertly trying to instigate U.S. aggression towards Iran.

6.5% of the troll narratives attack Hamas. The predominant narratives label Hamas’s actions as acts of terrorism, accompanied by warnings of potentially robust retaliation. These narratives also implicate Hamas in destabilizing regional peace, with indirect allusions to Iran as the potential puppeteer behind the scenes.

5.8% of the troll narratives demonstrate a clear pro-Palestine stance. Within this segment, a prevalent contention is that narratives supporting Israel reflect a double standard. Moreover, an emerging narrative paints the conflict as a collaborative false flag orchestrated by Israel and the U.S. Notably, the slogan “Free Palestine” frequently punctuates these expressions.

Table 3: Aimed entities and summary of narratives manipulated by coordinated troll groups on YouTube

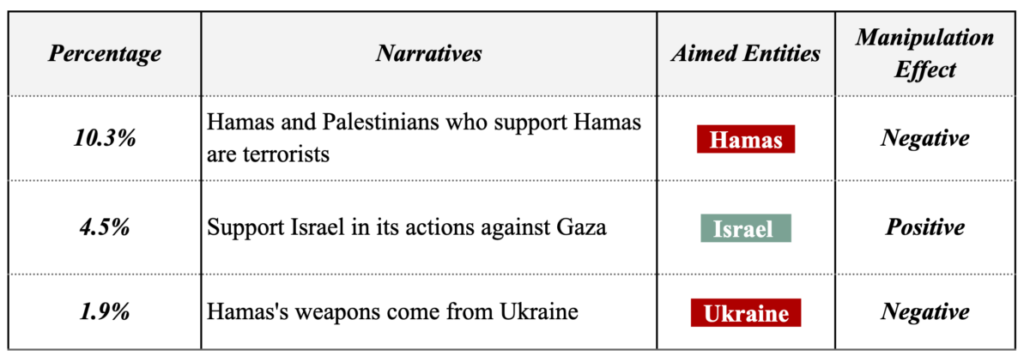

In addition to the aforementioned, AI Labs utilized sophisticated clustering algorithms to discern predominant narratives emanating from these troll accounts. Preliminary findings indicate that on the YouTube platform, 10.3% of these narratives uphold the sentiment that “Hamas and Palestinians who endorse Hamas are categorically terrorists.” Meanwhile, 4.5% overtly endorse Israel’s tactical responses against Gaza. Of significant interest is the 1.9% narrative subset suggesting that “Hamas’s armaments are sourced from Ukraine”—a narrative intriguingly resonant with positions articulated by Chinese state-affiliated media outlets.

Table 4: Percentage of narratives and aim entities manipulated by coordinated troll groups on YouTube.

Case study 2: X (Twitter)

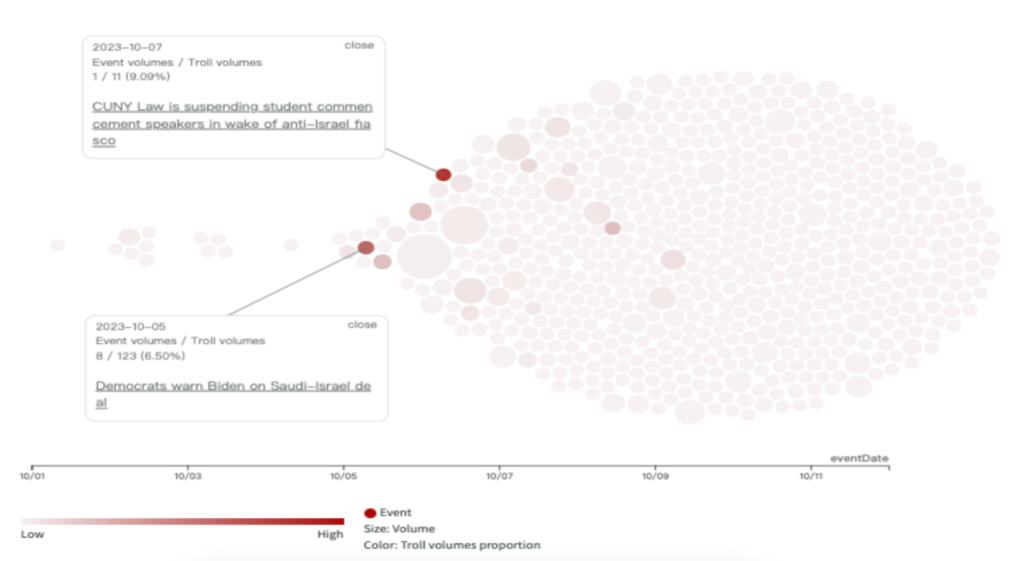

AI Labs curated a dataset comprising 8,650 tweets and 61,568 associated replies. Within this corpus, replies posted by troll groups totaled 295, constituting approximately 0.479% of all replies. In total, 34 distinct troll groups were active.

The timeline for activity on X (Twitter) is presented below. The events subjected to the most pronounced troll activity were “CUNY Law’s decision to suspend student commencement speakers following an anti-Israel debacle” and “Democrats cautioning Biden concerning the Saudi-Israel accord,” with troll activity levels of 9.09% and 6.50%, respectively.

Figure 6: A beeswarm plot showing the timeline of the story events of Hamas attack on X (Twitter).

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

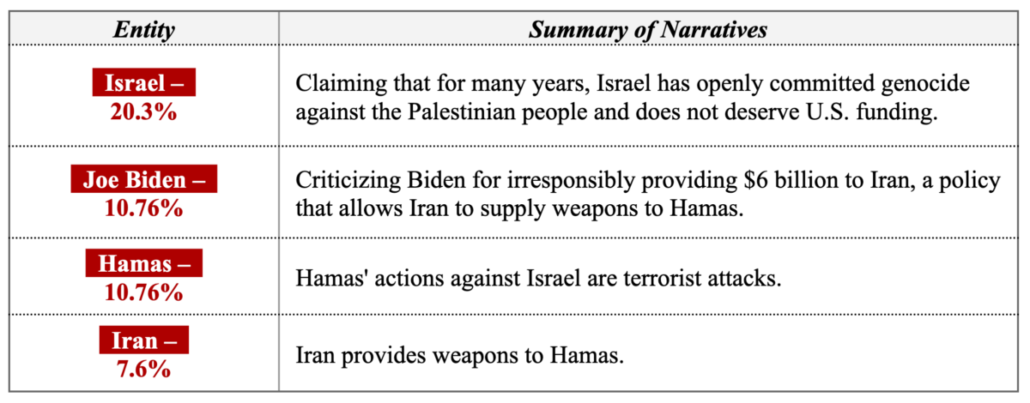

In a comprehensive analysis conducted by AI Labs, troll groups on X (Twitter) were observed to focus predominantly on Israel, Biden, Hamas, and Iran. Specifically:

20.3% of the troll narratives attack Israel, emphasizing that Israel has systematically perpetrated what can be characterized as genocide against the Palestinians, rendering it undeserving of financial backing from the U.S.

10.76% of the troll narratives attack Joe Biden, claiming that Biden’s allocation of $6 billion to Iran reflects fiscal and strategic imprudence and suggesting that this policy facilitates Iran’s provision of arms to Hamas.

10.76% of the troll narratives attack Hamas, with the core narrative characterizing Hamas’ actions against Israel as acts of terrorism.

7.6% of the troll narratives criticize Iran, predominantly accusing it of being a principal arms supplier to Hamas.

Table 5: Aimed entities and summary of narratives manipulated by coordinated troll groups on X (Twitter)

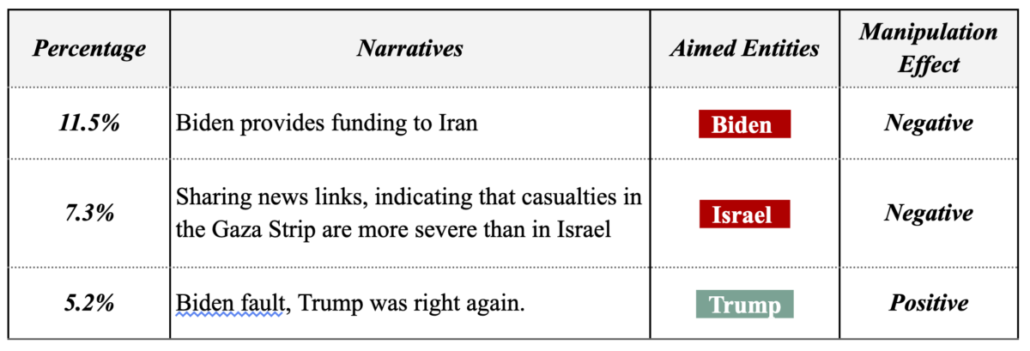

Leveraging clustering methodologies, AI Labs identified dominant narratives among these troll groups on X(Twitter). Approximately 11.5% of troll replies focused on the theme “Biden’s Financial Backing to Iran”, overtly criticizing Biden’s foreign policy decisions. An additional 7.3% of replies contained links underscoring the alleged disparity in casualty rates between the Gaza Strip and Israel. Moreover, 5.2% of the troll replies appeared to advocate for Trump’s Middle Eastern policies, positioning them as judicious and effective compared to current strategies.

Table 6: Percentage of narratives and aim entities manipulated by coordinated troll groups on X (Twitter).

Case study 3: PTT

PTT is a renowned terminal-based bulletin board system (BBS) in Taiwan. Our team extracted data from 312 pertinent posts and 62,308 comments on PTT. Of these comments, those attributed to coordinated groups totaled 3,613, representing 5.8% of the overall comment volume. In total, there were 110 troll groups actively participating in discussions.
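As a quick arithmetic check, the troll-comment shares reported in the three case studies can be recomputed from the stated counts. The snippet below uses only the figures given in this report; the variable names are illustrative.

```python
# (troll comments, total comments) as reported in each case study
counts = {
    "YouTube": (681, 175_072),
    "X (Twitter)": (295, 61_568),
    "PTT": (3_613, 62_308),
}

# Share of comments attributed to troll accounts, in percent
shares = {
    platform: 100 * troll / total
    for platform, (troll, total) in counts.items()
}

for platform, share in shares.items():
    print(f"{platform}: {share:.3f}%")
```

The recomputed shares round to 0.389%, 0.479%, and 5.8%, matching the reported values. The share on PTT is roughly an order of magnitude higher than on the other two platforms.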

The temporal analysis on PTT reveals four major incidents with significant user engagement and heightened levels of coordinated activity. These are:

“Schumer meets Wang Yi, urges Mainland China to bolster Israel amidst the Israel-Palestine clash,” with troll activity accounting for 11.06%.

“Over 150 individuals abducted! Hamas spokesperson: Without Israel’s ceasefire, hostage negotiations remain off the table,” exhibiting a troll activity rate of 11.20%.

“Persistent provocations by Hamas! What deters Israel from seizing Gaza? Experts weigh in,” with a troll activity proportion of 9.97%.

“In the Israel-Palestine Confrontation, the mastermind’s identity behind Hamas’s terrorist attacks is revealed. Having evaded numerous assassination attempts in Israel, he has been physically incapacitated and wheelchair-bound for an extended period,” which saw a troll activity rate of 9.88%.

Figure 7: A beeswarm plot showing the timeline of the story events of Hamas attack on PTT

* Each Circle Represents an Event related to this manipulated story

** The Size of each circle is defined by the sum of the social discussion of that Event

*** The Darker the circle is, the Higher the proportion of troll comments in the Event

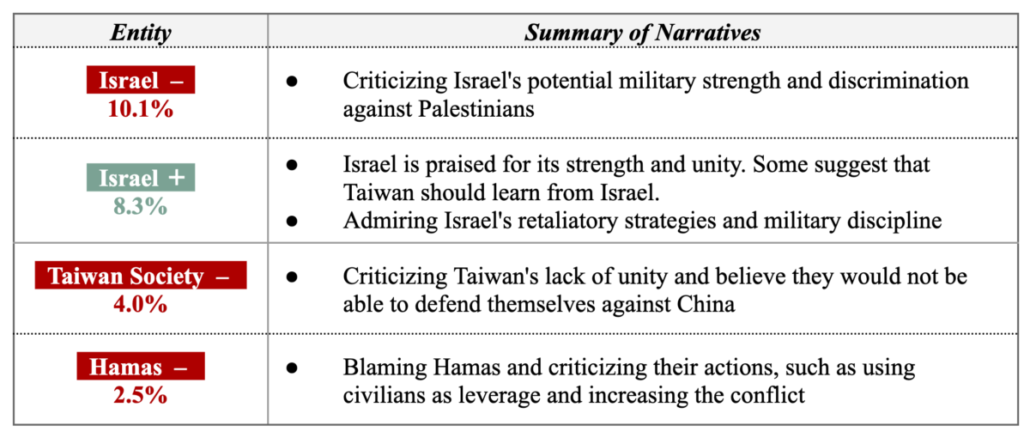

Using AI, AI Labs examined the narratives of troll accounts on PTT and identified their primary targets as Israel, Taiwanese society, and Hamas. Regarding Israel, troll discourse on PTT splits into supportive and critical stances. Critical narratives against Israel constitute 10.1%, predominantly highlighting criticisms of Israel’s purported military prowess and alleged discrimination against Palestinians. On the other hand, troll discourse championing Israel represents 8.3%, primarily lauding Israel for its robustness and solidarity. Some voices even suggest that Taiwan could draw lessons from Israel, admiring Israel’s retaliatory methods and military discipline.

Given PTT’s status as a localized Taiwanese community platform, it is unsurprising that discussions also encompass the Taiwanese societal context. A notable 4% of the troll narratives criticize Taiwan’s perceived lack of unity and express skepticism about its capacity to withstand potential Chinese aggression. Meanwhile, 2.5% of the troll narratives attack Hamas, primarily decrying tactics such as using civilians as bargaining chips, which arguably escalate hostilities.

Table 7: Aimed entities and summary of narratives manipulated by coordinated troll groups on PTT

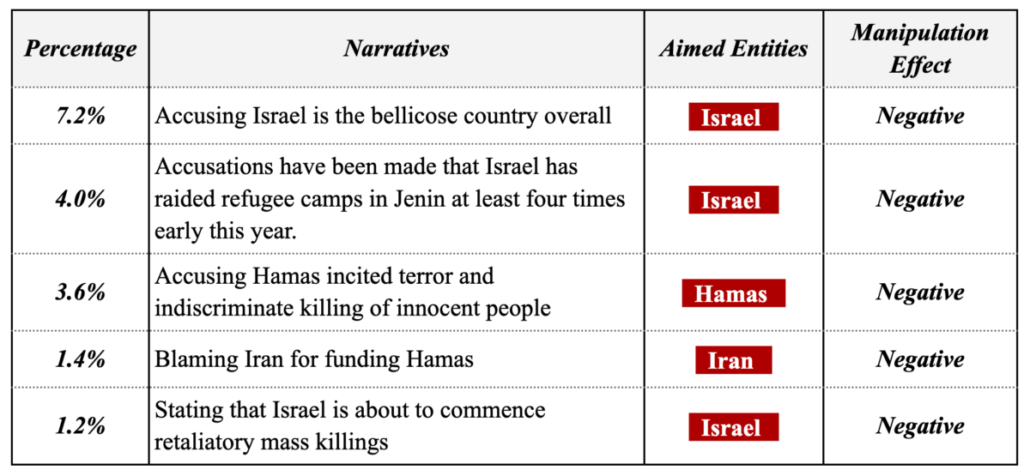

For narrative categorization, AI Labs employed clustering techniques to classify troll group discussions. Notably, 7.2% of discourse content pointed fingers at Israel, painting it as predominantly belligerent. Additionally, 4% highlighted accusations against Israel for allegedly conducting multiple raids on refugee camps in Jenin earlier that year, while 1.2% of the narrative indicated impending large-scale retaliatory actions by Israel. These threads predominantly hold Israel in a critical light. Conversely, 3.6% of discussions condemned Hamas for allegedly perpetrating terror and indiscriminate civilian harm, whereas 1.4% blamed Iran for purportedly financing Hamas.

Table 8: Percentage of narratives and aim entities manipulated by coordinated troll groups on PTT

On YouTube, troll entities seem to bolster their engagement and visibility by posting comments that echo narratives familiar from Chinese state-affiliated media, such as claims that “Hamas procures weapons from Ukraine” or that “China can serve as a peace-broker.”

In addition, troll accounts on X (Twitter) appear to leverage the Israel-Palestine conflict as a fulcrum to agitate domestic political discussions, predominantly spotlighting criticisms against Biden’s Iran funding policy.

Figure 8: Troll accounts comment under media tweets to agitate domestic political discussions, predominantly spotlighting criticisms against Biden’s Iran funding policy.

In the case of PTT, the most pronounced troll narratives seem to portray Israel as inherently aggressive, implicating it in alleged raids on refugee camps earlier in the year. Interestingly, diverging from tactics observed on other platforms, troll entities on PTT chiefly underscore perceived fractures in Taiwanese societal cohesion and purported vulnerabilities against potential Chinese aggression.

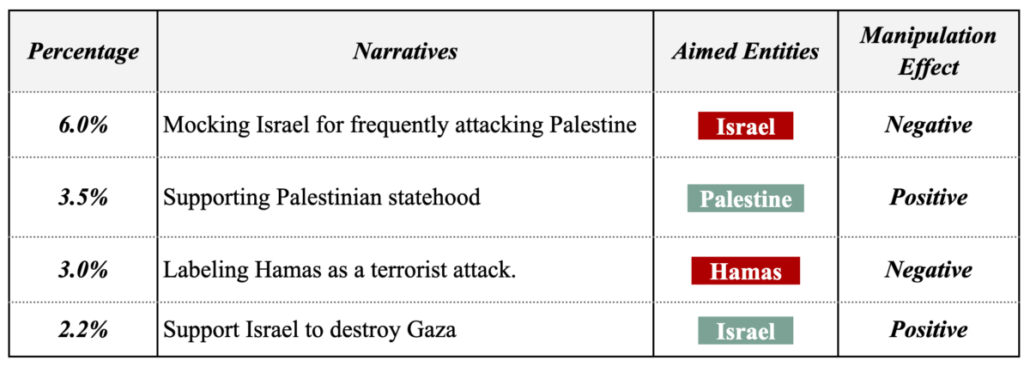

Aggregating troll narratives across platforms, we discern that 6% of discussions mock Israel for its purported frequent aggressions against Palestine. Approximately 3.5% advocate for Palestinian statehood, and 3.0% categorize Hamas operations as terroristic. In contrast, 2.2% of discourses support Israel’s proposed actions.

Table 9: Percentage of narratives and aim entities manipulated by coordinated troll groups on all platforms

Chinese state-affiliated media’s narratives and troll operations

AI Labs analyzed news from Chinese state-affiliated media outlets and observed that certain articles experienced extensive re-circulation within China. On October 8th, the Chinese Ministry of Foreign Affairs responded to the incident where Hamas attacked Israel, urging an immediate ceasefire to prevent further escalation of the situation. Later that day, a video titled “The Israel-Palestine War Has Begun! Who is the Hidden Hand Behind the Israel-Palestine Conflict?”(《經緯點評》以巴開戰了!谁是以巴戰爭的幕後黑手?) was released on the YouTube channel operated by David Zheng. This video echoed the sentiments expressed by the Chinese Ministry of Foreign Affairs, suggesting that “China can serve as a mediator between Israel and Palestine, becoming a peacemaker, thus positioning itself to have greater influence in the world.” There was noticeable activity by troll groups in the comments section of this video, aiming to amplify its reach and propagate its viewpoints.

Figure 9: Statement from the Chinese Ministry of Foreign Affairs and the YouTube video echoing its narrative.

Figure 10: Troll accounts comment under the video “The Israel-Palestine War Has Begun! Who is the Hidden Hand Behind the Israel-Palestine Conflict?”(《經緯點評》以巴開戰了!谁是以巴戰爭的幕後黑手?), aiming to amplify its reach and propagate its viewpoints.

* Comments with a colored background were posted by troll accounts; comments with the same color belong to the same group.

On October 10th, the Chinese Global Times cited a news piece from the Russian state-affiliated media outlet Russia Today, which quoted Florian Philippot, the chairman of the French Patriot Party. The article highlighted allegations that weapons supplied by the US to Ukraine had surfaced in the Middle East and were now being used in violent confrontations, with the quantities described as “vast!” This claim has been confirmed as false by the fact-checking organization NewsGuard Technologies. The narrative also appeared within the discourse of troll groups on YouTube: about 1.9% of the discussions echoed this news item propagated by Chinese state-affiliated media on behalf of Russia. AI Labs postulates that this narrative might be a dissemination effort by China on behalf of Russia, aimed at undermining support for Ukraine from the US and its Western allies.

In early June 2023, Dr. Jung-Chin Shen, an Associate Professor at York University in Canada, observed that numerous users within China were discussing the Israel-Palestine conflict on the platform Douyin. In their discourse, Israel appeared to be consistently retreating, and Palestine seemed to be on the verge of winning the war.

Figure 11: Screenshots of videos related to the Israel-Palestine conflict on Douyin in early June 2023. (Source: Jung-Chin Shen’s Facebook)

Discussion

The findings of this article indicate that cognitive warfare and information operations are becoming increasingly sophisticated and are being used to achieve a broader range of objectives. The research also indicates that information manipulation could be a leading indicator of future conflicts.

Specifically, the evidence showed that troll groups spread narratives blaming Israel for its apartheid policy and encroachment on the West Bank in August and September 2023, right before the conflict began. This suggests that information manipulation was used to sow discord and weaken public trust in the Israeli government, which may have contributed to the outbreak of the conflict.

In addition, the research indicated that cognitive warfare and information operations were used to sow discord and weaken public trust in institutions. These tactics were used to exacerbate tensions between Israelis and Palestinians and to undermine the credibility of the Israeli and Palestinian governments.

These findings have implications for the future of cognitive warfare and information operations. Governments and organizations need to be aware of the threat posed by these tactics and develop strategies to combat them.

Because these tactics are becoming more sophisticated and are being used to achieve a broader range of objectives, developing new strategies to combat them is essential. Future research can investigate how foreign actors, including Iran, Russia, and China, were involved in the conflict and how they used social media to spread disinformation and propaganda supporting their interests.

This research is still in its early stages, but it has the potential to significantly contribute to our understanding of cognitive warfare and information operations. The findings of this research could be used to inform the development of new strategies to combat these threats and to protect democratic societies from their harmful effects.

References

[1] Tucker, P. (2023). How China could use generative AI to manipulate the globe on Taiwan. Defense One. https://www.defenseone.com/technology/2023/09/how-china-could-use-generative-ai-manipulate-globe-taiwan/390147/

[2] Beauchamp-Mustafaga, N., & Marcellino, B. (2023). The U.S. Isn’t Ready for the New Age of AI-Fueled Disinformation—But China Is. Time. https://time.com/6320638/ai-disinformation-china/

[3] Al-Otaibi, S., Altwoijry, N., Alqahtani, A., Aldheem, L., Alqhatani, M., Alsuraiby, N., & Albarrak, S. (2022). Cosine similarity-based algorithm for social networking recommendation. Int. J. Electr. Comput. Eng, 12(2), 1881-1892.

[4] Lee, D. D., & Seung, H. S. (2001). Algorithms for non-negative matrix factorization. In Advances in neural information processing systems (Vol. 13, pp. 556-562).

[5] Covas, E. (2023). Named entity recognition using GPT for identifying comparable companies. arXiv preprint arXiv:2307.07420.