The Magic of Making the Cameraman Disappear: Removing Objects from 8K 360° Videos

360° video, also known as immersive video, has become increasingly popular because it opens up new possibilities for content creators and encourages viewer engagement. One representative application is Taiwan Traveler, Taiwan’s first smart tourism platform. Developed by Taiwan AI Labs, it uses 360° videos to create immersive experiences by inviting viewers on virtual journeys to scenic attractions across Taiwan. For all their advantages, 360° videos also pose some challenges. One of the most critical drawbacks for the user experience is the presence of the cameraman in the footage: since a 360° camera captures views in every direction, there is no place for the cameraman to hide. To alleviate this problem, we developed a cameraman removal algorithm that modifies existing video object segmentation and video inpainting methods to automatically remove the cameraman from 360° videos and thus enhance visual quality.

Overview of Existing Methods

Removing unwanted objects from videos (video object removal) is a practical yet challenging task. There are two main approaches: manually editing every frame with image-based object removal tools, or editing the whole video automatically with a video object removal tool.

Manual Single-Frame Editing

There are many image object removal tools, such as the healing brush, the patch tool, and the clone stamp tool. However, it is difficult to extend these techniques to videos. Applying image object removal directly to each frame of a video fails because it does not take temporal consistency into account. That is, although image object removal methods can hallucinate plausible content for each frame, the hallucinated content differs from frame to frame, which is easily spotted by the human eye and leads to a poor user experience. To maintain temporal consistency, the post-production crew needs to go through each frame and manually replace the unwanted objects with plausible content that also matches the neighboring frames, which is both time-consuming and labor-intensive.

Automatic Video Object Removal Approaches

Content-Aware Fill, introduced in Adobe After Effects in 2019, is the most relevant feature we could find on the market for automatically removing unwanted objects from videos. Nonetheless, Content-Aware Fill requires manually annotated masks for keyframes, and it only produces good results for relatively small objects. Its processing speed is also so slow that it is only recommended for short videos. To remove the cameraman from a long 360° video with little human effort, we propose a cameraman removal algorithm that can automatically remove the cameraman from a given video with only “one” annotated mask required.

Our Methods

Figure 1: left: original image, middle: video mask generation result, right: video inpainting result.

In this task, our goal is to keep track of the cameraman throughout a video and replace the cameraman in each frame with plausible background textures in a temporally consistent manner. To achieve this, we split the task into two main parts: 1) Video Mask Generation, and 2) Video Inpainting. The first part generates the mask of the cameraman in each frame, and the second part fills the masked region with background textures coherently.

Rotating the Cameraman Up to Avoid Distortion

Figure 2: top: equirectangular frame, bottom: rotated equirectangular frame (rotated up by 90°). The red dotted lines mark the regions of the cameraman to be removed.

Before introducing the methods behind Video Mask Generation and Video Inpainting, it is worth mentioning a small trick we apply to the video frames to sidestep the distortion problem in equirectangular projection. The top image in Figure 2 shows the equirectangular projection of a frame from a 360° video, in which the cameraman is highly distorted. Distorted objects are extremely hard to deal with, since most modern convolutional neural networks (CNNs) are designed for NFOV (normal field of view) images that contain no such distortion. To mitigate this problem, we first project the equirectangular frame onto a unit sphere, then rotate the sphere up by 90° along the longitude and project it back to an equirectangular frame. We refer to the results of this transformation as rotated equirectangular frames. As shown in Figure 2 (bottom), the cameraman in the rotated equirectangular frame has almost no distortion, so we can apply convolutional neural networks to it effectively.
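
The rotation described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the production code: the function name `rotate_equirect_up`, the `pitch_deg` parameter, the axis convention (x forward, z up), and the nearest-neighbour sampling are all assumptions made for the example.

```python
import numpy as np

def rotate_equirect_up(frame, pitch_deg=90.0):
    """Resample an equirectangular frame so that content at the bottom
    (nadir) moves up by pitch_deg. Hypothetical helper for illustration."""
    h, w = frame.shape[:2]
    # Longitude and latitude of every output pixel centre.
    lon = (np.arange(w) + 0.5) / w * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (np.arange(h) + 0.5) / h * np.pi
    lon, lat = np.meshgrid(lon, lat)
    # Project output pixels onto the unit sphere (x forward, z up).
    x = np.cos(lat) * np.cos(lon)
    y = np.cos(lat) * np.sin(lon)
    z = np.sin(lat)
    # Rotate about the y axis to find where each output pixel
    # looks in the source frame.
    t = np.deg2rad(pitch_deg)
    xs = np.cos(t) * x + np.sin(t) * z
    zs = -np.sin(t) * x + np.cos(t) * z
    # Back to longitude/latitude, then to source pixel indices.
    src_lon = np.arctan2(y, xs)
    src_lat = np.arcsin(np.clip(zs, -1.0, 1.0))
    u = ((src_lon + np.pi) / (2.0 * np.pi) * w).astype(int) % w
    v = np.clip(((np.pi / 2.0 - src_lat) / np.pi * h).astype(int), 0, h - 1)
    # Nearest-neighbour lookup; a real pipeline would likely interpolate.
    return frame[v, u]
```

With `pitch_deg=90`, the centre of the output frame samples the bottom row of the input, which is where the cameraman appears in the original projection. Applying the inverse rotation (`pitch_deg=-90`) after inpainting would map the result back to the original layout.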

By transforming equirectangular frames into rotated equirectangular frames, we not only eliminate the cameraman’s distortion but also simplify the subsequent video processing steps. We now introduce the methods behind Video Mask Generation and Video Inpainting.

Video Mask Generation

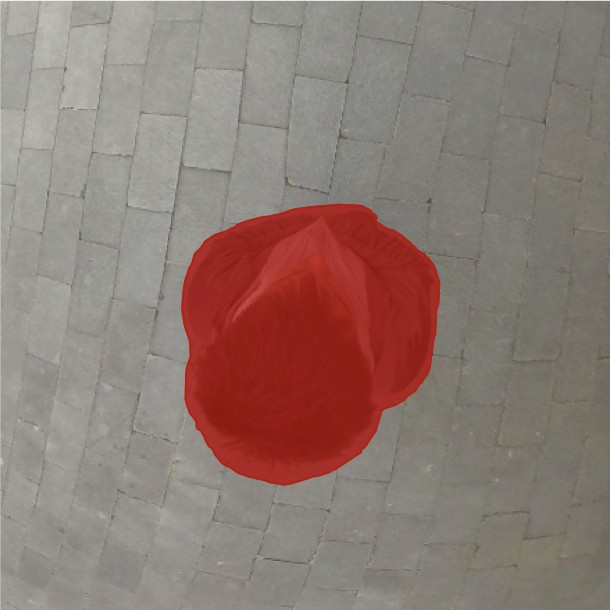

Figure 3: video mask generation result.

To generate masks for the cameraman, we apply the video object segmentation algorithm proposed by Yang et al. [1]. Given a manually annotated mask of the cameraman for one frame of the video, this algorithm automatically tracks the cameraman and generates accurate masks for the rest of the frames.

While the algorithm works well in most cases, we observed three scenarios in which video mask generation fails: the cameraman’s feet appearing only intermittently, harsh lighting conditions, and a similar appearance between the background and the cameraman’s clothing. In the first scenario, the cameraman’s feet do not appear in every frame, so it is difficult for the model to keep track of them. In the second scenario, harsh lighting can drastically change the pixel values of the cameraman, which leads to inaccurate feature extraction and in turn degrades the mask prediction. In the third scenario, when the cameraman’s clothing looks too similar to the background texture, the model is confused and treats a portion of the cameraman as background.

Figure 4: failure cases in video mask generation. left: exposed cameraman’s feet, middle: harsh lighting condition, right: similar appearance between the cameraman’s clothing and the background.

Video Inpainting

Figure 5: video inpainting result image.

For video inpainting, the goal is to fill the masked region with high-resolution content while preserving temporal consistency. We tried several approaches to this challenging task. Applying image inpainting independently to each frame fails because of temporal inconsistency, and video inpainting approaches that are not based on optical flow fail because their output is low-resolution or blurry. The most convincing method is FGVC [2], an optical flow-based video inpainting algorithm that obtains the correlation between pixels in neighboring frames by computing the optical flow between every two consecutive frames in both directions. Using these pixel correlations, FGVC fills the masked region by propagating pixels from the unmasked regions, which naturally produces high-resolution, temporally consistent results.
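
To make the idea of flow-based propagation concrete, here is a deliberately simplified NumPy sketch. It is not FGVC itself, which also completes the flow inside the mask and fuses candidates from many frames. The function name `propagate_from_neighbor`, the array layouts, and the nearest-pixel rounding are assumptions made for the example.

```python
import numpy as np

def propagate_from_neighbor(frame, mask, neighbor, neighbor_mask, flow):
    """Fill masked pixels of `frame` with pixels from `neighbor`.

    frame, neighbor: (H, W, C) images.
    mask, neighbor_mask: (H, W) bool arrays, True where pixels are missing.
    flow: (H, W, 2) displacement (dx, dy) from `frame` to `neighbor`.
    Returns the filled frame and the mask of pixels still missing.
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    # Follow the flow from each missing pixel into the neighbouring frame.
    tx = np.rint(xs + flow[ys, xs, 0]).astype(int)
    ty = np.rint(ys + flow[ys, xs, 1]).astype(int)
    inside = (tx >= 0) & (tx < w) & (ty >= 0) & (ty < h)
    # A target is usable only if it lies in the frame and is not masked.
    valid = np.zeros_like(inside)
    valid[inside] = ~neighbor_mask[ty[inside], tx[inside]]
    out = frame.copy()
    out[ys[valid], xs[valid]] = neighbor[ty[valid], tx[valid]]
    remaining = mask.copy()
    remaining[ys[valid], xs[valid]] = False
    return out, remaining
```

Pixels that land on a masked or out-of-frame location stay in `remaining`; repeating the step with frames further away in time gives them more chances to find a visible source pixel.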

However, two serious issues prevent us from applying FGVC to our videos directly. The first is processing speed. Although the algorithm can handle high-resolution input, it takes a long time to finish a single video, which is infeasible when many videos are waiting in the queue. The second is that FGVC cannot handle long videos because of limited CPU memory. The algorithm needs to keep the flow maps of the entire video in CPU memory, so there is a good chance that the machine runs out of memory if the video is too long.
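
A back-of-the-envelope estimate shows why memory becomes the bottleneck. The numbers below are assumptions chosen for illustration, not measurements from our pipeline: a 7680×3840 frame, two float32 values per pixel for each flow map, forward and backward flow for every pair of consecutive frames, and a one-minute clip at 30 fps.

```python
def flow_memory_bytes(width, height, num_frames,
                      bytes_per_value=4, bidirectional=True):
    """Bytes needed to hold dense flow maps for a whole clip.

    Hypothetical helper: each flow map stores (dx, dy) per pixel, and
    there is one map per pair of consecutive frames and per direction.
    """
    pairs = max(num_frames - 1, 0)
    per_map = width * height * 2 * bytes_per_value
    directions = 2 if bidirectional else 1
    return pairs * per_map * directions

# Assumed example: 60 seconds at 30 fps, full 8K resolution.
total = flow_memory_bytes(7680, 3840, num_frames=60 * 30)
print(f"{total / 2**30:.1f} GiB")
```

Under these assumptions the flow maps alone would need roughly 790 GiB, far beyond the memory of a typical workstation, which is why both the resolution of the flow computation and the number of frames held at once have to be reduced.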

Figure 6: Flow map comparison before and after removing the cameraman. left: original frame, middle: flow map of the original frame with the cameraman in it (the purple region indicates the cameraman’s flow is different from the background’s flow in green), right: completed flow map after removing the cameraman (fill the cameraman region with the smooth flow).

Figure 7: Comparison of cameraman removal results with and without Poisson blending. left: original frame, middle: cameraman removal without Poisson blending, right: cameraman removal with Poisson blending.

To cope with the above issues, we made the following tweaks to the algorithm.

To improve performance, we first compute the flow maps only on a cropped, downsampled patch of each frame, since the cameraman is always located near the middle of the frame. Second, after removing the cameraman’s flow from each flow map, we downsample the flow map again before completing the missing flow with the background flow. We expect the background flow to be smooth, so it is largely unaffected by downsampling (Figure 6). Third, we removed the Poisson blending operation, which spends a lot of processing time solving an optimization problem. Instead, we reconstruct the missing regions by directly propagating pixel values using the predicted flow maps, which saves a lot of time with no obvious quality difference compared to the Poisson blending approach (Figure 7). Lastly, since we only reconstruct the missing regions in a cropped, downsampled patch of each frame, we use a super-resolution model [3] to upsample the inpainted patch back to high resolution without losing much visual quality.
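
The cropping and flow downsampling steps can be sketched as follows. This is an illustrative sketch only: the helper names `center_crop` and `downsample_flow`, the block-average filter, and the integer `factor` are assumptions. The one detail worth noting is that flow vectors are measured in pixels, so they must be divided by the same factor as the image grid.

```python
import numpy as np

def center_crop(frame, crop_h, crop_w):
    """Return the central crop_h x crop_w patch of a frame."""
    h, w = frame.shape[:2]
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    return frame[top:top + crop_h, left:left + crop_w]

def downsample_flow(flow, factor):
    """Downsample an (H, W, 2) flow map by an integer factor.

    Each factor x factor block is averaged, and the displacement values
    are divided by factor so they stay in units of the smaller grid.
    """
    h, w, _ = flow.shape
    h2, w2 = h // factor, w // factor
    blocks = flow[:h2 * factor, :w2 * factor]
    blocks = blocks.reshape(h2, factor, w2, factor, 2)
    return blocks.mean(axis=(1, 3)) / factor
```

Averaging works well here precisely because the background flow is expected to be smooth; a flow field with sharp motion boundaries would lose detail under the same treatment.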

To address the second issue, we developed a dynamic video slicing algorithm that fits hardware memory limits while preserving temporal consistency. The algorithm schedules one slice of frames at a time, so that the entire inpainting process can run without exhausting memory. To keep two consecutive slices temporally consistent, we use the last few inpainted frames of one slice as guidance for the next. The inpainting process in the next slice can then use the inpainted regions in the guidance frames to generate results that are temporally consistent with the previous slice. The slicing algorithm also makes sure that every slice contains the same number of frames, so that quality stays consistent across slices.
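
A minimal sketch of such a slicing plan is shown below. It is a simplified, static version written for illustration, not our scheduler: the function name `plan_slices`, its parameters, and the choice to balance slice lengths so they differ by at most one frame are assumptions.

```python
import math

def plan_slices(num_frames, max_frames_in_memory, num_guidance):
    """Split a video into near-equal slices with guidance overlap.

    Returns a list of (guide_start, start, end) half-open index ranges.
    Frames [start, end) are inpainted in this slice; frames
    [guide_start, start) are already-inpainted frames from the previous
    slice that are loaded as guidance.
    """
    if not 0 <= num_guidance < max_frames_in_memory:
        raise ValueError("num_guidance must be smaller than the memory budget")
    if num_frames <= 0:
        return []
    # Reserve room for guidance frames in every slice.
    capacity = max_frames_in_memory - num_guidance
    num_slices = math.ceil(num_frames / capacity)
    # Spread the frames evenly instead of leaving a short final slice.
    base, extra = divmod(num_frames, num_slices)
    slices, start = [], 0
    for i in range(num_slices):
        end = start + base + (1 if i < extra else 0)
        slices.append((max(0, start - num_guidance), start, end))
        start = end
    return slices
```

For example, `plan_slices(100, 32, 4)` yields four slices of 25 new frames each, and every slice after the first also loads the 4 preceding inpainted frames as guidance, so no slice ever holds more than 32 frames.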

In addition to the adjustments above, we fixed a bug in which the algorithm used the wrong masks during pixel reconstruction, which produced incorrect border pixels. With these modifications, our algorithm can now process a long 8K video on a single NVIDIA 2080 Ti GPU at a decent speed.

Results

Figure 8: top: original frame (the cameraman in the frame is outlined by the red dotted line), bottom: frame with the cameraman removed.

Figure 8 compares a frame before and after cameraman removal. The cameraman, together with their shadow in the bottom part of the frame, is completely removed and replaced with plausible background textures. The result noticeably improves the video quality and gives viewers a more immersive experience.

Figure 9: left: color shading, right: a bad tracking result causes a poor inpainting outcome (see the tracking result in Figure 4, right).

Apart from the compelling results shown above, our cameraman removal algorithm has two potential issues: color shading, and poor inpainting outcomes caused by bad tracking results. Color shading occurs because we fill the cameraman region by borrowing pixel “colors”, via pixel correlations, from different frames with different lighting conditions. As a result, the brightness of the borrowed pixels does not always blend seamlessly. Poor inpainting outcomes caused by bad tracking stem from inaccurate masks produced during video mask generation. If the mask does not fully cover the cameraman in a frame, the exposed part of the cameraman may prevent us from obtaining accurate pixel correlations, which harms the inpainting process and leads to unreliable results.

Conclusions

Our cameraman removal algorithm shows that deep learning models are capable of handling long, high-resolution 360° videos, where each of these three properties can be a difficult computer vision problem on its own. By applying some modifications to existing video mask generation and video inpainting methods, we achieve compelling results. The algorithm saves a great deal of human labor: removing the cameraman from just one video traditionally takes an annotator several days. It is also robust enough to be applied to real-world 360° virtual tour videos and to produce pleasing, high-quality results. (See more results on Taiwan Traveler’s official website: https://tter.cc)

References

[1] Yang, Z., Wei, Y., & Yang, Y. (2020). Collaborative video object segmentation by foreground-background integration. In Proceedings of the European Conference on Computer Vision.

[2] Gao, C., Saraf, A., Huang, J.B., & Kopf, J. (2020). Flow-edge guided video completion. In Proceedings of the European Conference on Computer Vision.

[3] Wang, X., Yu, K., Wu, S., Gu, J., Liu, Y., Dong, C., Qiao, Y., & Loy, C. (2018). ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision Workshops.